I’ve been listening to podcasts and reading articles about Quantum Computing for quite some time already and, oh dear, the more I listen and read about it, the more lost in the subject I feel. But that’s ok. Yes, that’s ok, because when you start in this subject you will often read or hear that if you think you understand Quantum Computing, it means that you don’t understand Quantum Computing.

I’m no Quantum physicist and my knowledge of algebra and logarithms dates back to when I finished college, so bear with me while I try to explain what Quantum Computing is, hoping, as I said before, that you won’t think you understand it.

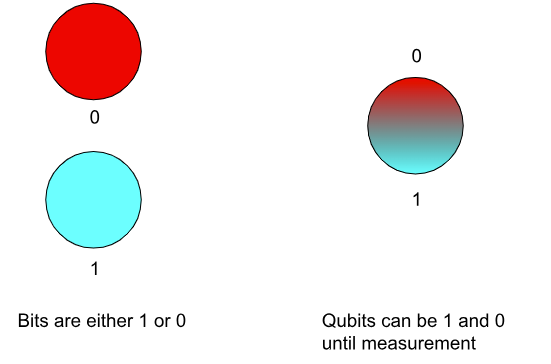

In traditional computing, bits are the base unit of information. Each bit holds a single value, either 1 or 0 (which can also be read as true or false, yes or no, etc). Classical computers operate on these bits in what is known as serial processing, meaning that one operation must be completed before moving on to the next one. This can be improved with parallel processing, which runs several of these series at once.

Now, in Quantum Computing the base unit is the Qubit. A Qubit’s value can be 1, 0 or (hold on to your seats) a combination of both at the same time, a state known as superposition, where the value is not defined until it is measured. This changes the deterministic paradigm of classical computing into a probabilistic one, meaning that the same input can produce different outputs, each with a certain probability of being the correct one. Here is where things get confusing and, for a “classic engineer” like myself, my brain starts to hurt, since we have always understood that being non-deterministic is not good.
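To make the “not defined until measured” idea a bit more tangible, here is a minimal sketch in plain Python. It is not a real Quantum program, just a classical simulation under my own assumptions: a Qubit is represented by two amplitudes (the names `alpha` and `beta` are mine), and the probability of reading 0 or 1 is the square of the corresponding amplitude.

```python
import math
import random


def measure(alpha, beta, rng):
    """Simulate measuring one Qubit with amplitude alpha for 0 and beta for 1."""
    p_zero = abs(alpha) ** 2  # probability of reading 0 is |alpha|^2
    return 0 if rng.random() < p_zero else 1


def run_shots(alpha, beta, shots=1000, seed=42):
    """Prepare the same Qubit many times and count the measured values."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[measure(alpha, beta, rng)] += 1
    return counts


# Equal superposition: both outcomes have probability 1/2.
alpha = beta = 1 / math.sqrt(2)
counts = run_shots(alpha, beta)
print(counts)  # roughly half zeros and half ones
```

Each individual measurement still returns a plain 0 or 1; the superposition only shows up in the statistics when the same experiment is repeated many times.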

Then, how do we know that a result from a Quantum computer calculation is correct? The easy answer would be: an answer is correct when the probability of getting it is above 50%. But it’s not that simple… With the help of quantum mechanics, Quantum algorithms are typically designed so that a single run returns the correct answer with a probability of at least 2/3. If we run the same calculation multiple times, the result that shows up most often, in roughly 2/3 of the runs or more, is assumed to be correct.
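The “run it many times and keep the most repeated answer” idea can be sketched with a simulated noisy calculation. Everything here is an assumption for illustration: `noisy_algorithm` is a made-up stand-in that returns the right answer 2/3 of the time and one of a few wrong answers otherwise.

```python
import random
from collections import Counter


def noisy_algorithm(correct_answer, wrong_answers, rng, p_correct=2 / 3):
    """Hypothetical stand-in for one run of a probabilistic calculation."""
    if rng.random() < p_correct:
        return correct_answer
    return rng.choice(wrong_answers)


def majority_vote(runs, seed=7):
    """Repeat the calculation and return the most frequent result and its share."""
    rng = random.Random(seed)
    results = [noisy_algorithm(15, [3, 9, 21], rng) for _ in range(runs)]
    answer, count = Counter(results).most_common(1)[0]
    return answer, count / runs


answer, share = majority_vote(runs=501)
print(answer, round(share, 2))
```

A single run is wrong one time out of three, but the chance that a wrong answer wins the vote shrinks very quickly as the number of runs grows.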

And surprise! It’s not that simple again… The components of many Quantum Computers, such as those built on superconducting Qubits, need to be kept in a very, very cold environment (a few hundredths of a degree above absolute zero, thousands of times colder than room temperature) and any small disturbance in the environment might erase the Qubits’ data and cause errors. Think of any small increase in temperature as a way to mess your entire experiment up, not to mention the so-called Cosmic Rays, the nemesis of Quantum Computing. With that said, what do you think would be a good environment for placing a Quantum Computer? Yes, in space. Space temperature is just a little bit above absolute zero, temperature doesn’t change, no disturbance… except Cosmic Rays. Luckily, we don’t need to have these computers in space since scientists can reproduce these conditions here on Earth, making this whole thing a bit easier (did I just say easier?).

There are a lot more details about the characteristics and behavior of Qubits, like entanglement (think of it as linking Qubits so that they can only be described together, which is what lets the number of states a group of Qubits can represent grow exponentially with every Qubit added), Quantum logic gates (the building blocks of Quantum logic circuits, like classical logic gates but more complicated and reversible) and several different algorithms to improve the efficiency of these calculations.
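Entanglement and gates are easier to picture with a tiny simulation. The sketch below is my own simplification, not how a real Quantum Computer is programmed: a 2-Qubit state is a list of four amplitudes (for 00, 01, 10 and 11), and the helper functions `hadamard_on_first`, `cnot` and `sample` are names I made up. Applying a Hadamard gate and then a CNOT gate produces the classic entangled pair, where both Qubits always agree when measured.

```python
import math
import random


def hadamard_on_first(state):
    """Put the first Qubit of a 2-Qubit state into superposition."""
    a00, a01, a10, a11 = state
    s = 1 / math.sqrt(2)
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot(state):
    """Flip the second Qubit when the first one is 1 (swaps the 10 and 11 amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state, shots, seed=1):
    """Simulate measuring both Qubits `shots` times."""
    rng = random.Random(seed)
    labels = ["00", "01", "10", "11"]
    weights = [abs(a) ** 2 for a in state]
    outcomes = rng.choices(labels, weights=weights, k=shots)
    return {label: outcomes.count(label) for label in labels}


state = [1.0, 0.0, 0.0, 0.0]           # both Qubits start at 0
bell = cnot(hadamard_on_first(state))  # entangled pair
counts = sample(bell, shots=1000)
print(counts)  # only "00" and "11" ever appear
```

The gates are reversible too: calling `cnot` twice on any state gives back the original state, which is not true of a classical AND or OR gate.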

The important thing here is that any calculation done by a classical computer can be done by a Quantum computer, and vice versa, any calculation done by a Quantum computer can be done by a classical computer. Then what’s the point of overcomplicating things, adding probability to deterministic problems and sending computers to space? The main difference or improvement is calculation time. Saying that a classical computer can solve any calculation that a Quantum computer can solve is only a theoretical statement, because for some of the problems we are trying to solve a classical computer would take millions of years, probably longer than the age of the universe, while a Quantum computer could take minutes.

Take for instance a factorisation problem, where we multiply two prime numbers and give the result to a computer to figure out which two numbers we used to get it. A classical computer using the naive approach would go through the candidate prime numbers one by one and check whether each of them divides the given result. Even with better algorithms, for big numbers this can take a very long time, which is known as super-polynomial complexity. A Quantum computer could solve this problem in polynomial time: roughly speaking, thanks to superposition it can work on a huge number of possibilities at once rather than sequentially, as a classical computer does.
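The naive classical approach to the factorisation problem fits in a few lines. This is only a sketch of trial division (the function name `factor_semiprime` is mine), not the best classical algorithm available, but it shows why the running time explodes: the loop may need about as many steps as the square root of the number, and that square root grows exponentially with the number of digits.

```python
def factor_semiprime(n):
    """Find two factors of n by trial division, assuming n is a product of two primes."""
    if n % 2 == 0:
        return 2, n // 2
    candidate = 3
    while candidate * candidate <= n:  # no need to look past the square root
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 2  # skip even candidates
    raise ValueError("n has no non-trivial factors")


print(factor_semiprime(15))           # (3, 5)
print(factor_semiprime(1009 * 1013))  # (1009, 1013)
```

For the small numbers above this is instant. For a product of two primes with hundreds of digits each, the same loop would not finish in any realistic amount of time.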

Current cryptography is based on these limitations, using algorithms such as RSA to create keys that would require super-polynomial time to break, making it practically impossible for current computers. Quantum computers are a threat to these cryptographic methods since, using algorithms such as Shor’s algorithm, they will be able to break this kind of cryptography in polynomial time, turning even today’s strongest keys into a tractable problem. The good thing is that we are not there yet, and there are different theories and opinions about how long it will take to build Quantum computers with enough Qubits to break keys such as RSA-2048. By the way, Bitcoin signs its transactions with elliptic-curve keys (ECDSA), which Shor’s algorithm also threatens, and relies on SHA-256 for hashing, which opens another conversation about the longevity of Bitcoin based on the evolution of Quantum computing.
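To see why factorisation matters so much for RSA, here is a toy example with ridiculously small primes (61 and 53, picked by me for illustration; real keys use primes hundreds of digits long). It is a sketch of the textbook scheme without any of the padding real implementations add. Once an attacker factors the public number `n`, rebuilding the private key takes one line.

```python
def factor(n):
    """Brute-force the two prime factors of n (only feasible for tiny keys)."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    raise ValueError("no factors found")


# Key generation with toy primes.
p, q = 61, 53
n = p * q                          # public modulus
e = 17                             # public exponent
d = pow(e, -1, (p - 1) * (q - 1))  # private exponent (needs Python 3.8+)

message = 65
cipher = pow(message, e, n)        # encrypt with the public key

# The attacker only knows n, e and cipher, but can factor n.
found_p, found_q = factor(n)
recovered_d = pow(e, -1, (found_p - 1) * (found_q - 1))
recovered = pow(cipher, recovered_d, n)
print(recovered)  # 65, the original message
```

With real key sizes the `factor` step is the part no classical computer can finish, and it is exactly the part a large enough Quantum computer would speed up.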

To wrap things up, working Quantum computers have been around since 1998 and the technology is still in its early stages, with lots of uncertainty about how fast it will evolve and many probabilities to be adjusted. But if we think about its non-deterministic nature, do we really need to figure everything out before introducing this technology into our lives, or will we get used to living in this new world of constant uncertainty and non-determinism?

Here is a list of the different frameworks and SDKs available to start experimenting and developing using Quantum Computing:

- Qiskit: Open Source framework developed by IBM, one of the first published and a very good option for beginners. It uses Python and offers different tools for circuit design, simulation and execution. It has very good documentation and tutorials, has one of the largest communities and integrates with many Python-based libraries and platforms.

- Cirq: Open Source framework developed by Google, probably the main competitor of Qiskit and IBM in the Quantum Computing race. It has a similar offering to Qiskit, it also uses Python and it’s probably the main alternative for beginners.

- Microsoft Quantum Development Kit: Another Open Source framework, in this case developed by Microsoft, using the Q# language, a Quantum-specific language whose syntax will feel familiar to C# developers. It’s also a good option for beginners due to its good documentation and tutorials, though it’s more oriented to developers that are familiar with Microsoft tools and languages, such as C# and Azure.

- Forest and PyQuil: Forest is also an Open Source SDK created by Rigetti, which includes the Python library PyQuil to write and execute Quantum programs on different machines such as Rigetti’s Quantum Computers. Being led by a less known company compared to the others, it has a smaller community and less documentation and it’s more oriented to the use of their own hardware, but that community, support and tools are continuously growing.

- PennyLane: Open Source library for Quantum Machine Learning, Optimization and Quantum Computing. Its usage requires a good foundation in Machine Learning and it’s more oriented to this area than to general computing. It also uses Python and can be executed on different Quantum hardware such as IBM’s and Google’s quantum processors. This is the recommended option for Machine Learning engineers and Data Scientists.

I hope after reading this you still don’t understand how Quantum Computing works because it will mean that you are one step closer to understanding how it really works.

PS: If you want to see a more visual representation of everything discussed above, I recommend you go and play Google’s Qubit Game. It’s quite fun and a very good simplification of how a Quantum Computer works 🙂

If you find this article interesting, still have some doubts about the content or would just like to have a discussion with us, don’t hesitate to reach out to us here.