Introduction

AI tools like ChatGPT streamline tasks, enhance quality, and help with unfamiliar challenges, but data security remains a significant concern. Join us as we walk through the deployment of our custom Large Language Model (LLM), a solution designed to amplify our capabilities without putting our data at risk.

Defining the Landscape of Generative AI Tools

Among Generative AI tools, ChatGPT is the best known. Its versatility helps users complete a wide range of tasks quickly and accurately. GPT stands for Generative Pre-trained Transformer, a type of Large Language Model (LLM): a model that processes natural-language input and predicts the next word (more precisely, the next token) based on what it learned during training.

Despite the remarkable capabilities of these tools, a tangible problem arises: the ownership of data flowing through these free tools. The lack of control over sensitive information sparks significant concern. This realisation prompted our team to explore alternatives, leading to the creation of our proprietary LLM. This strategic development allows us to harness the benefits of Generative AI while safeguarding the security and confidentiality of our organisational information.

LLM Selection Parameters

Purpose

The first step is to select an LLM suited to its intended purpose. Although it is possible to create or train one, we recommend starting with the Open Source models available on Hugging Face, whose large and active community keeps adding new options.

If we want to use a lightweight LLM to build a cost-efficient solution focused on one or a few specific use cases, we first need to identify the purpose and use cases the LLM should fulfil.

There are three main purposes for using an LLM, regardless of the business context:

- Text generation

- Text summarisation

- Text classification

A lightweight LLM is typically optimised for one of these purposes. Some LLMs handle all three well, but they are usually larger models. More specific purposes, such as translation, require a model trained for that purpose, and the more languages the LLM has to translate to, the larger it tends to be.
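As a rough illustration, the three purposes above correspond to task names used by the Hugging Face `transformers` library. The sketch below assumes that library is installed when `load_pipeline` is called. The helper name and the `model_id` argument are ours, and no particular model is implied.

```python
# Maps the three purposes listed above to `transformers` pipeline task names.
TASK_FOR_PURPOSE = {
    "text generation": "text-generation",
    "text summarisation": "summarization",
    "text classification": "text-classification",
}


def load_pipeline(purpose: str, model_id: str):
    """Return a transformers pipeline for one of the three purposes.

    `model_id` is whichever model you selected on Hugging Face.
    """
    key = purpose.strip().lower()
    if key not in TASK_FOR_PURPOSE:
        raise ValueError(f"Unsupported purpose: {purpose!r}")
    # Imported lazily so the mapping can be inspected without the library installed.
    from transformers import pipeline

    return pipeline(task=TASK_FOR_PURPOSE[key], model=model_id)
```

Keeping the purpose explicit like this makes it easier to swap in a smaller, single-purpose model later.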

Size

Balancing size and performance is pivotal. Generally speaking, larger models trained on more extensive datasets tend to give more precise responses than smaller ones. However, precision is not directly proportional to size: the number of parameters can be reduced significantly with a much smaller loss in precision. For a narrow use case, a 1B-parameter model can achieve results similar to those of a 15B-parameter model. The key is to select an LLM size that fits the project’s goals and budget.
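To make the size trade-off concrete, here is a back-of-the-envelope sketch of the memory needed just to hold a model's weights. It assumes the common rule of thumb of bytes per parameter times parameter count, and it ignores activations and other runtime overhead, so real usage will be higher.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("1B", 1e9), ("15B", 15e9)]:
    print(f"{name}: ~{weights_memory_gb(params):.0f} GB of weights at fp16")
```

Under this estimate a 1B model needs about 2 GB at fp16 and a 15B model about 30 GB, which is why the smaller model is so much cheaper to host. For reference, the A10G GPU in a g5.xlarge has 24 GB of memory.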

Architecture

Most LLMs are optimised for NVIDIA GPU architectures, so running them out of the box requires a machine with an NVIDIA GPU. AWS also offers EC2 Inferentia instances, which are designed for cost-efficient LLM inference. These instances are around 20-30% cheaper than other instances, such as g5, and have better specs than those more expensive options. However, they run on an AWS-specific architecture, so a model must be converted before it can run on them. Our recommendation is to start with a model on NVIDIA architecture and then experiment with converting it to the AWS architecture if required.

Licence

Models published on Hugging Face, though Open Source, come with different licence types. Check both the model licence and the licence of the dataset used to train it.

Most datasets used to train Open Source models are open and free for commercial use. However, some datasets have limitations or include information that is not open for commercial use. The same applies to the model licence, which is chosen by the model’s creators. If our LLM-powered application is part of a product we want to commercialise, we need a model whose licence and training dataset both allow commercial use. If the LLM will only be used internally, the choice of model, dataset, and licence is far less restricted, although the licence terms are still worth checking. For more insights about Open Source licences, visit the official website Licences.
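The rule above can be written down as a small check. This is only a sketch: the allow-list is an assumption containing a few well-known permissive licences, and it is no substitute for reading the actual licence text.

```python
# Assumption: a hand-maintained allow-list of licence identifiers that permit
# commercial use. Extend it only after reading the licence itself.
COMMERCIAL_OK = {"apache-2.0", "mit", "bsd-3-clause"}


def licence_allows(model_licence: str, dataset_licences: list[str], commercial: bool) -> bool:
    """Commercial products need a commercially usable licence for the model
    AND for every dataset it was trained on."""
    if not commercial:
        # This article treats internal-only use as unrestricted.
        return True
    licences = [model_licence, *dataset_licences]
    return all(lic.lower() in COMMERCIAL_OK for lic in licences)


print(licence_allows("apache-2.0", ["mit"], commercial=True))           # True
print(licence_allows("apache-2.0", ["cc-by-nc-4.0"], commercial=True))  # False
```

The second call fails because the dataset carries a non-commercial licence, even though the model licence itself is permissive.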

Setting up an LLM in EC2: A High-Level Process

This is a high-level process for setting up an EC2 instance that hosts an LLM and the web services that expose its functionality to users. While alternatives may emerge as use cases evolve, EC2 and related services (ECS, EKS, Fargate) stand out as a very good generic option for hosting an LLM due to their flexibility. However, they require more AWS knowledge and maintenance than other services, such as SageMaker.

EC2 instance recommendations

For optimal performance based on NVIDIA GPU architecture, the following are the minimum recommended settings for an EC2 instance to run an LLM:

Instance type: g5.xlarge

AMI: Deep Learning AMI GPU PyTorch 2.0.1 (Ubuntu 20.04) 20230627

Storage: ~100 GB (depending on the size of the model or models)

Using the Deep Learning AMI avoids the need to manually install PyTorch and the different NVIDIA drivers required to run the LLM, which can be a difficult process depending on the LLM and Python versions used.
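Once the instance is up, it is worth confirming that the GPU is actually visible before loading a model. The sketch below shells out to `nvidia-smi`, which ships with the NVIDIA driver. The helper name is ours, and the function simply returns an empty list on machines without the tool.

```python
import shutil
import subprocess


def list_nvidia_gpus() -> list[str]:
    """Return one 'name, total memory' line per visible NVIDIA GPU, or [] if none."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tooling is not installed on this machine
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_nvidia_gpus()
print(gpus if gpus else "No NVIDIA GPU visible")
```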

The associated cost for this instance is approximately $1 per hour of use, $24 per day, $744 per month when running 24/7.
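The figures above follow directly from the hourly rate, and the monthly total corresponds to a 31-day month. A small sketch makes it easy to try other schedules. The office-hours scenario in the last line is a hypothetical example, not something we measured.

```python
HOURLY_RATE_USD = 1.0  # approximate on-demand rate quoted above


def running_cost(hours_per_day: float, days: int, rate: float = HOURLY_RATE_USD) -> float:
    """Cost in USD of keeping the instance up for the given schedule."""
    return hours_per_day * days * rate


print(running_cost(24, 1))   # one full day: 24.0
print(running_cost(24, 31))  # a 31-day month, 24/7: 744.0
print(running_cost(10, 22))  # hypothetical: 10 h/day on 22 working days: 220.0
```

Stopping the instance outside working hours is therefore one of the simplest ways to reduce the bill.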

Depending on the model being deployed, additional libraries may need to be installed on the instance, and an API may eventually be needed to integrate the LLM with different clients.
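As an idea of what such an API could look like, here is a minimal sketch built only on the Python standard library. The `/generate` path, the JSON field names, and the `generate` placeholder are all assumptions for illustration. A production service would add authentication, TLS, and a proper web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Placeholder for the real model call (hypothetical)."""
    return f"[model output for: {prompt}]"


def handle_payload(raw_body: bytes) -> tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, response dict)."""
    try:
        payload = json.loads(raw_body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "body must be valid JSON"}
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_payload(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start the server; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Calling `serve()` on the instance exposes a single endpoint that the clients described below could call. Keeping validation in `handle_payload` means it can be tested without starting a server.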

Example Clients: Showcasing the Versatility of Our Custom LLM

Jira plugin

We have developed a Jira plugin that uses GenAI to generate Acceptance Criteria in Gherkin format (BDD) based on the user story description.

The plugin is configured to connect to an inference endpoint of an LLM to request Acceptance Criteria generation using an optimised prompt and task description.

After the user initiates the generation, the response is parsed and the generated Acceptance Criteria are displayed in Gherkin format within the task’s description.
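The prompt-and-parse flow described above can be sketched as follows. The prompt template and the filtering rule are illustrative assumptions, not the plugin's actual implementation.

```python
GHERKIN_KEYWORDS = ("Feature:", "Scenario:", "Given ", "When ", "Then ", "And ", "But ")


def build_prompt(story_description: str) -> str:
    """Hypothetical prompt template for requesting Gherkin acceptance criteria."""
    return (
        "Write acceptance criteria in Gherkin format for the user story below.\n"
        "Use only Scenario, Given, When, Then and And lines.\n\n"
        f"User story:\n{story_description.strip()}\n"
    )


def extract_gherkin(model_output: str) -> str:
    """Keep only lines that start with a Gherkin keyword, dropping any chatter.

    Leading indentation is stripped from the kept lines.
    """
    kept = [
        line.strip()
        for line in model_output.splitlines()
        if line.strip().startswith(GHERKIN_KEYWORDS)
    ]
    return "\n".join(kept)


sample_output = """Sure, here are the criteria:
Scenario: Successful login
  Given a registered user
  When they submit valid credentials
  Then they are taken to the dashboard
Hope this helps!"""
print(extract_gherkin(sample_output))
```

Filtering on keywords is a simple way to discard the conversational filler that models often wrap around the requested output.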

VS Code extension

Using a VS Code extension, our developers can translate code from one language to another, enhance readability, or create unit tests.

The extension is connected to an LLM that translates a piece of code from one programming language to another, migrates it to a different language or framework version, or refactors it based on specific parameters. Parameters include the language or framework version and the code standards to follow (sync/async, loops, etc). When no parameters are provided, the extension performs a literal translation of the code.

Use case examples:

- Migrate old code to newer versions

- Migrate from one programming language to another

- Increase the quality of the code

With a few small code changes, we adapted the extension to detect the programming language automatically and, by default, create unit tests using the most common or default unit test framework for that language.

Additionally, the extension can receive parameters to configure the framework and version used for the generated unit tests.
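The default-with-override behaviour described above could be implemented along these lines. This is a Python sketch of the prompt-assembly logic only. The lookup tables and function name are assumptions, and the extension itself may detect the language differently.

```python
from pathlib import Path
from typing import Optional

# Assumed lookup tables; extend them for the languages your team uses.
LANGUAGE_BY_EXTENSION = {".py": "Python", ".java": "Java", ".ts": "TypeScript", ".cs": "C#"}
DEFAULT_TEST_FRAMEWORK = {"Python": "unittest", "Java": "JUnit", "TypeScript": "Jest", "C#": "xUnit"}


def build_unit_test_prompt(
    filename: str,
    code: str,
    framework: Optional[str] = None,
    version: Optional[str] = None,
) -> str:
    """Detect the language from the file extension and pick a test framework."""
    language = LANGUAGE_BY_EXTENSION.get(Path(filename).suffix.lower())
    if language is None:
        raise ValueError(f"Cannot detect language for {filename!r}")
    # An explicit framework parameter overrides the per-language default.
    chosen = framework or DEFAULT_TEST_FRAMEWORK[language]
    target = f"{chosen} {version}" if version else chosen
    return f"Write {target} unit tests for the following {language} code:\n\n{code}"


print(build_unit_test_prompt("calc.py", "def add(a, b):\n    return a + b"))
```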

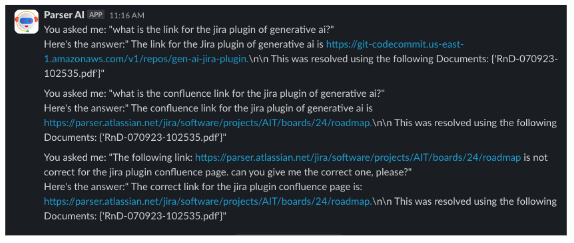

Slack bot

The Slack Bot offers a user-friendly way for our employees to engage with the LLM in a conversational manner. It facilitates testing prompts, asking for business-related information, and enhancing productivity in different areas of the company, such as engineering, staffing, finance, or sales. The interface gives quick answers to questions that would otherwise require extensive web browsing. It also helps employees to create, summarise, and refine text-based content, or even produce code snippets.

Examples of usage include:

- Coding Companion Bot: Bot using an LLM trained on over 80 programming languages. It aids developers with knowledge, answers some general knowledge questions, and assists with documentation.

- Knowledge base Bot: Bot powered by an LLM and a vector database. Users can ask specific questions about documents, website information, or any other kind of textual content. The accuracy always depends on the quality of the content. The bot can help create new content based on existing information without requiring additional model training.

- Image Generation Bot: Bot driven by image generation models like Stable Diffusion. It creates content based on specific training data and is especially useful for marketing content.

NLQ (Natural Language Query)

This integration connects the LLM to different databases so that users can ask questions about the data in natural language, without any SQL knowledge.

Here we use the LangChain library to connect our LLM to a database. It’s a powerful tool for C-level or staffing teams, who may not know SQL but need to ask for specific information. The LLM generates the SQL query from the question, and the code executes it against the configured database to retrieve the results.
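The question-to-SQL flow can be illustrated without any external dependencies. The sketch below uses `sqlite3` and a stand-in `fake_llm` function instead of LangChain and a real model, so every name in it is hypothetical. It shows the steps only: describe the schema, ask for SQL, check it, and run it.

```python
import sqlite3


def fake_llm(prompt: str) -> str:
    """Stand-in for the real LLM call; always returns the same query."""
    return "SELECT department, COUNT(*) FROM employees GROUP BY department ORDER BY department"


def answer_question(conn: sqlite3.Connection, question: str, llm=fake_llm) -> list:
    # 1. Describe the schema so the model knows which tables and columns exist.
    schema = "\n".join(
        row[0] for row in conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'")
    )
    prompt = f"Schema:\n{schema}\n\nWrite one SQL SELECT statement that answers: {question}"
    # 2. Ask the model for SQL.
    sql = llm(prompt).strip().rstrip(";")
    # 3. Refuse anything that is not a single SELECT statement.
    if not sql.lower().startswith("select") or ";" in sql:
        raise ValueError(f"Refusing to run non-SELECT SQL: {sql!r}")
    # 4. Execute the query and return the rows.
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ana", "Sales"), ("Ben", "Sales"), ("Cleo", "Finance")],
)
print(answer_question(conn, "How many employees are in each department?"))
```

The guard in step 3 is deliberately minimal. Because the SQL is generated by a model, connecting with a read-only database user is a safer default than relying on string checks alone.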

Document Analysis

We’ve uploaded different documents to a vector database and used the LLM to answer questions based on their content. It’s similar to the previous example, except that the data source is a collection of documents. The tool analyses your input and retrieves results from the documentation.

For this case, we use Danswer, which lets users ask natural-language questions against internal documents and receive reliable answers backed by quotes and references from the source material. It can connect to a number of common tools such as Slack, GitHub, and Confluence, amongst others.
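To show the idea behind this kind of document search, here is a toy retrieval sketch. It is not how Danswer works internally: real systems use an embedding model and a vector database, whereas this example substitutes simple word counts and cosine similarity so that it runs with the standard library alone.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_rag_prompt(question: str, chunks: list[str]) -> str:
    """Put the retrieved chunks in the prompt so the LLM can quote its sources."""
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(top_chunks(question, chunks), 1))
    return f"Answer using only the sources below and cite them by number.\n{context}\n\nQuestion: {question}"


docs = [
    "Expenses must be submitted within 30 days of purchase.",
    "The VPN client is installed from the internal software portal.",
    "Annual leave requests are approved by the line manager.",
]
print(top_chunks("How do I install the VPN client?", docs, k=1))
```

Numbering the sources in the prompt is what allows answers to come back with references to the original material.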

Final thoughts

Running our own custom LLM gives us full control over our data and lets us expand our capabilities and design new features with minimal risk. The associated costs are reasonable and affordable. In our upcoming article, we’ll explore alternatives such as Amazon Bedrock, which could potentially halve these costs.